About

Falcon-H1-Arabic

Falcon-H1-Arabic is a complete departure from traditional large language models.

Built on a new hybrid Mamba-Transformer architecture, Falcon-H1-Arabic represents a significant evolution of the Falcon-H1 family, combining higher accuracy with greater efficiency.

It was designed specifically to deliver state-of-the-art Arabic language understanding, reasoning, and long-context performance.

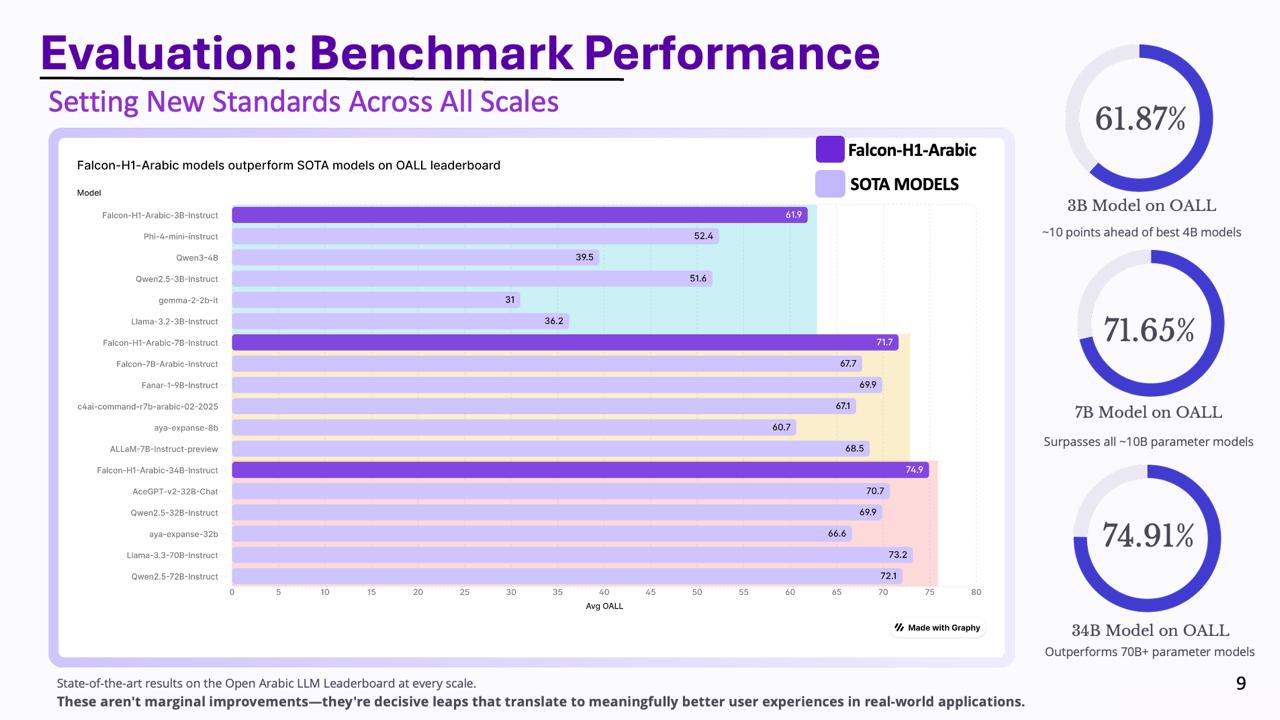

Falcon-H1-Arabic sets a new global benchmark for Arabic AI, achieving leading performance on the Open Arabic LLM Leaderboard (OALL).

Proof Points:

Purpose-built Arabic AI designed specifically for high-quality Arabic language understanding

The first Arabic LLM with a Mamba-Transformer hybrid architecture for higher accuracy and efficiency

Trained on native-Arabic datasets without reliance on machine translation

Supports Modern Standard Arabic and dialects, including Gulf, Levantine, North African and Egyptian

Leading global open-source AI model

Multilingual model supporting 18 languages

Available in flexible model sizes for varied infrastructure and compute environments: 3B, 7B and 34B.

Supports context windows of up to 256K tokens, equivalent to roughly 100K words

Performance Benchmark

We purpose-built Falcon-H1-Arabic to support the linguistic and cultural complexity of Arabic across real-world applications.

Major Improvements In:

The Falcon-H1-Arabic Family is available in 3B, 7B, and 34B parameter variants for flexibility across a wide range of infrastructure and deployment needs.

They may be small, but they outperform the mighty:

3B model

Outperforms the best state-of-the-art models by more than 10 points on the OALL benchmark. The model is suitable for lightweight applications and agentic AI.

7B model

Surpasses all models under 10B parameters on the OALL benchmark. Provides a good trade-off between efficiency and accuracy.

34B model

Outperforms even 70B+ parameter systems on Arabic language benchmarks.

High-quality Arabic AI doesn’t need excessive model scale or infrastructure overhead

For Long Context

Falcon-H1-Arabic supports context windows of up to 256K tokens, so it can process and reason across large volumes of Arabic text in a single interaction. Users can analyze lengthy legal documents, medical records, academic papers, or enterprise knowledge bases without losing coherence or continuity, a capability previously unavailable at this level for Arabic language models.
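As a rough illustration of what a 256K-token window means in practice, the sketch below estimates whether a document is likely to fit. It relies only on the ratio quoted on this page (256K tokens for about 100K words). Several things are assumptions for illustration: "256K" is read as 256,000, words are counted by splitting on whitespace, and the names `estimate_tokens`, `fits_in_context`, and `reserved_for_output` are hypothetical helpers, not part of any Falcon tooling. Real token counts depend on the model's tokenizer, so treat the result as a first-pass estimate.

```python
# Figures taken from this page: a 256K-token window holds roughly 100K words.
# Reading "256K" as 256,000 is an assumption made for this sketch.
CONTEXT_WINDOW_TOKENS = 256_000
WORDS_AT_FULL_WINDOW = 100_000


def estimate_tokens(text: str) -> int:
    """Estimate the token count from a whitespace word count.

    Uses integer ceiling division so the estimate never rounds down.
    """
    word_count = len(text.split())
    scaled = word_count * CONTEXT_WINDOW_TOKENS
    return (scaled + WORDS_AT_FULL_WINDOW - 1) // WORDS_AT_FULL_WINDOW


def fits_in_context(text: str, reserved_for_output: int = 4_000) -> bool:
    """Check whether the text plus a reserved output budget fits in the window.

    reserved_for_output is a hypothetical allowance for the model's reply.
    """
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    document = "كلمة " * 50_000  # stand-in for a 50,000-word Arabic document
    print(estimate_tokens(document))
    print(fits_in_context(document))
```

For an exact count, the same check could be run with the model's own tokenizer in place of the word-based estimate.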